1.1. Establishing the work scenarios and performance indicators

Information was gathered from the beneficiary in order to identify the specific operational needs and expected outcomes. This was used to define the solution's architecture and composing modules. Three general directions have been identified: (1) person's identity validation, (2) dissimulated behavior interpretation, and (3) automatic speech interpretation, each of them consisting of several implementation scenarios.

1.2. Analysis of the current legislative framework

We identified the current legislative framework concerning personal data processing and storage. Special data, such as biometrics used for uniquely identifying a person, require the person's agreement. On a general perspective, processing personal data with electronic means with the purpose of monitoring and/or evaluating some personality aspects and processing data from surveillance cameras must be reported to the National Agency of Personal Data Processing Monitoring. Intercepting non-public digital data without having the right to do so is strictly forbidden. We therefore apply these when developing the project.

1.3. Data standards (public and proprietary) and interoperability analysis

We identified the needed target communication and interoperability standards for developing the operational versions of the tools. Most notable are the ONVIF and the NATO-STANAG standards. Apart from those, the non-military formats have been also listed and took into consideration for further developments of the systems.

1.4. State-of-the-art on visual, audio and multimodal data processing and analysis

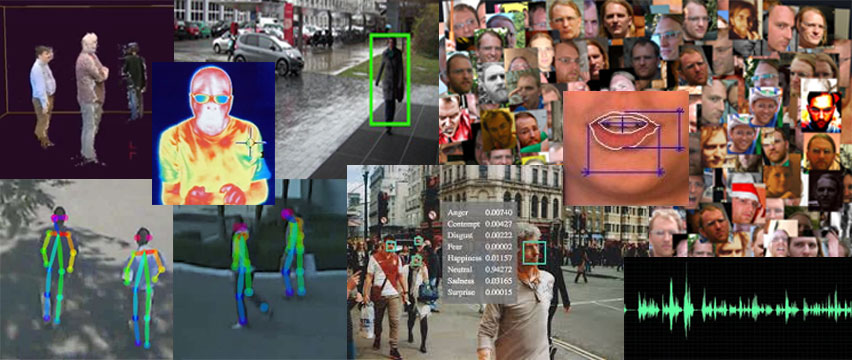

We reviewed the current state-of-the-art in the field and assessed the methods, their capabilities and the publicly available datasets that can be used for development of the techniques and their experimentation, namely: eProfiler - event and action detection and recognition, person re-identification; eSeeming - facial micro-expressions and expressions detection and recognition, behavior and dissimulated behavior detection and recognition; eTalk - speech-to-text, automated lip reading, speaker identification and recognition and audio quality enhancement techniques.

1.5. State-of-the-art on deep learning

We overviewed the current capabilities of deep learning techniques and architectures that can be implemented in the project. Several architectures have been identified as relevant for the project, namely: AlexNet, ZFNet, VGGNet, GoogLeNet, ResNet-152, SqueezeNet, R-CNN, Fast R-CNN, Faster R-CNN, LSTM, GAN, DenseNet, YOLO, RBM, Autoencoders, NADE, DCNN, CMNN, Deep Speech 2. The software tools necessary to operate these networks have also been retrieved.

1.6. Analysis of data security and identity management technologies

We identified the relevant solutions to ensure proper communication security methods for the data streams entering and exiting the system's network and security methods for the information stored in the systems' databases.

1.7. SWOT analysis of the identified relevant algorithms and systems

We run an in-depth SWOT (Strengths, Weaknesses, Opportunities, and Threats) analysis of the selected methods and algorithms from the literature, identifying the best and optimal solutions for the developing systems.

1.8. Analysis of the Centralized Management component for satisfying the open architecture requirements

We identified the proper architecture for ensuring a centralized management and inter-operability with existing systems.

1.9. Analysis of possible work topologies

We identified the proper solutions for the main distributed processing architectures, the available parallel processing technologies and data fusion techniques. Given the overall high complexity of the proposed system, it is important to take full advantage of state-of-the-art Big Data processing techniques. The system will integrate distributed computing solutions using regular PCs and/or Servers (GPU enhanced), as well as for very specific tasks, the advantages of the embedded systems, like the ones integrated with the cameras.

2.1. Study of computational complexity, scalability and concept design

There have been analyzed different methods for estimating the computational complexity and scalability of the algorithms. The system can be easily implemented by ensuring a parallel computational infrastructure (mainly GPU systems) scaled to the required on-the-field data volume, according to the different scenarios.

2.2. Analysis, development and documentation of operational working scenarios

There have been analyzed the ways to implement the operational working scenarios that meet the requirements of the beneficiaries and provide real support for the future design and development of the integrated system. Five operational scenarios have been defined: (i) analyzing crowd behavior in open spaces, (ii) analyzing persons in a waiting room, (iii) analyzing persons during an interview, (iv) identifying unknown persons based on their interactions with objects and other persons, (v) biometric person identification.

2.3. Architecture design for the integrated solution

The project’s solution is an integrated/general one that can receive data from different multi-modal sub-systems and process them using advanced machine learning systems featuring deep learning. To this end, a Video Analytics service has been developed, which will run on an independent platform and will receive data from other sub-systems such as the NVR (Network Video Recorder) system and directly from IP video sources (video surveillance cameras). The NVR (Network Video Recorder) system is used as the main element of video streaming management (storage, handling, video streaming, audio, thermal, infrared) being built around the ONVIF specifications. The VMS (Video Management System) will allow integration of all sub-systems, such as NVR (Network Video Recorder) and Video Analytics engine, ensuring interaction with the rest of the 3rd party sub-systems (e.g., the Control Access system - CA).

2.4. Annotated data for training/validating the algorithms

State-of-the-art annotated datasets have been identified, collected, processed and used to train/validate the algorithms. For most of the main systems, existing datasets were used (eProfiler - 9 datasets, eSeeming - 8 datasets, eTalk - 8 datasets). For particular tasks, both from the operational point of view and from the Romanian language perspective, new datasets were created: data was collected, manually processed and annotated by human operators (2 new datasets for crowd detection and behavioral analysis, 1 new dataset for emotion detection from voice, 2 new datasets for lipreading in Romanian, 2 new datasets for speech processing in Romanian).

2.5. Research, development and implementation of pre-processing techniques

Several pre-processing tricks have been implemented with the objective to enhance the final performance of the algorithms. The adopted techniques range from speech dereversion and additive noise reduction, narrow and wide band noise reduction, signal filtering, normalization, transcoding, body augmentation techniques, geometric and color transformations, detectors (e.g., faces, people, lips), to computational load reduction via frame selection, scaling and cropping.

2.6. Research, development and implementation of event recognition algorithms

Automatic crowd formation detection algorithms and automatic crowd behavior analysis algorithms have been developed with the objective of identifying potential critical situations, such as unwanted events or public incidents. The experimental evaluation was performed using real-life images, achieving an accuracy of up to 80% for the crowd detector and 60% for the behavior analysis.

2.7. Research, development and implementation of action recognition algorithms

Automatic violent behavior detection algorithms have been developed with the objective to identify potential critical situations which require immediate intervention by law enforcement operatives. The experimental evaluation was performed using dedicated annotated datasets (movie sources and Internet recordings) achieving a maximum accuracy of 86%.

2.8. Research, development and implementation of facial expressions recognition and emotion identification algorithms

Automated emotion detection algorithms have been developed based on visual information (images/video) and also on audio (voice) information with the objective of analyzing the suspect behavior of a person (e.g., emotions caused by dissimulated behavior). For the emotion analysis using visual information, the experimental evaluation was performed using simulated annotated datasets, achieving an accuracy of over 80%. For emotion analysis using audio information the experimental evaluation was performed on both simulated and real-world annotated datasets with interrogations, achieving an accuracy of up to 97%.

2.9. Research, development and implementation of speech recognition algorithms and speaker verification for Romanian language

Speaker verification and identification algorithms have been developed. Experimental validation was performed on established annotated datasets, yielding an accuracy of over 95%.

2.10. Research, development and implementation of algorithms for person re-identification

There have been developed algorithms for automatic recognition of a person in a crowd using body features as well as facial features and algorithms for automatic recognition of objects of interest with the general objective of identifying and tracking a suspect and the presence of potentially dangerous objects (e.g., an abandoned luggage). For person recognition via body features, the maximum average accuracy is 82%, for person recognition via facial features, the average accuracy is 62%, while for object recognition the average accuracy is 50%.

2.11. Research, development and implementation of voice-to-text and image-to-text algorithms for Romanian language

Several algorithms for the analysis and transcription of images and sound into text have been developed for Romanian language with the general objective of providing the possibility to identify the words spoken by a person. The goal is to identify the transmitted information. The evaluation was performed on annotated datasets, achieving a maximum accuracy of 57% for image-to-text (in lab conditions), an error of only 6% for speech-to-text, a mere 3% error for word search in read speech and 17% for spontaneous speech.

3.1. Research, development and implementation of algorithms for event recognition using neural networks (TRL4) (stage II)

Crowd detection and crowd behavior analysis systems were tested on various video sequences downloaded from the Internet, using scenarios similar to the ones requested in the project, both from the performance and execution time points of view. In this sense, the algorithms have been finalized, by evaluating and determining the optimal parameters. The proposed systems remain at the same performance, but the processing time is improved, which brings them closer to real-time applications.

3.2. Research, development and implementation of action recognition algorithms using deep neural networks (TRL4) (stage II)

Our proposed violence detection algorithm was augmented with an end-to-end DNN structure, where the differences between consecutive frames are processed by a VGG-19 type network and temporally aggregated through the use of ConvLSTM layers. The final result is obtained through the processing of ConvLSTM outputs by a series of fully connected layers. The updated algorithm obtains better results and ensures real-time processing functionality (more than 30 fps during our tests), which in turn ensures integration into the final solution.

3.3. Research, development and implementation of algorithms for facial expression recognition and emotion identification using convolutional neural networks (TRL4) (stage II)

For the detection of emotions from images, alternative training techniques for convolutional neural networks that are based on many non-annotated images have been investigated. The presented solutions avoid the use of additional classifiers for exploring the unlabeled set. The proposed solution is more time efficient, but the performance is lower than the case when we use additional classifier. The module for recognizing the emotions from video signal (still images or sequence of frames) was optimized from the point of view of the training procedure, the chosen networks architecture, and the hyperparameters. The solution was evaluated on recently introduced databases from the literature.

For speech emotion recognition, performance optimizations were targeted for MLP neural networks and recurrent neural networks using LSTM cells, with the best results being achieved using the former. System robustness maximization was also a target, achieved by training and testing using a new dataset. Additionally, the real time live-action module was developed, audio data acquisition being based on the PortAudio library. The speech emotion recognition module was optimized in regards to the available neural network hyperparameters and validated using some of the best datasets available in literature. In addition to these tasks, algorithms for measuring the heartrate and respiratory rate using photoplethysmography were developed.

3.4. Research, development and implementation of speech recognition and speaker verifying for the Romanian language using neural networks (TRL4) (stage II)

The best speaker recognition model (as of 2018), namely SincNet, was carefully analyzed and updated to produce even better results and to have a smaller memory footprint. More specifically, (i) we used a more compact network trunk that allowed for hyperparameter tuning and proved to be more robust to overfitting and (ii) we evaluated and eventually used traditional speech features (FBANK, MFCC) instead of the sinc features. The model was evaluated in several test scenarios and optimized for performance (hyperparameter tuning).

3.5. Research, development and implementation of person re-identification and object detection algorithms using deep neural networks (TRL4) (stage II)

A series of face and body detection and classification algorithms have been investigated, designed to further improve the proposed system, from both the evaluation metric and for the processing performance. In this context, the ResNet50 network proved to be the most suitable backbone for the proposed system, due to the promising results, and the low resources consumption in comparison with the DenseNet network, having half the depth than the latest.

Four different automatic object detection systems have been analyzed and implemented in order to detect suspicious objects in the scene. The best performing system proved to be Mask R-CNN. It is based on a pyramidal feature extracting backbone architecture, completed with a mask detection network head. The object detection principle is similar to the one used for person detection, but this time it is applied to a set of 80 different classes. The dataset used for training the system is crucial for the high performance of the entire system. Thus, a careful selection of the samples used during training can change the algorithm’s purpose altogether.

3.6. Research, development and implementation of translating algorithms from voice and image to text for Romanian language using neural networks (TRL4) (stage II)

The optimization of the lip reading algorithm also involved normalization and clean-up of the two datasets used in the project: LRRo and LRW (both of them comprising recordings “in the wild”). Two other deep architectures (Multiple Tower and Inception-v4) were designed, implemented and evaluated. These new models turned out to perform better than the previously developed models (EF-3), which had nearly random performance. A new annotated dataset was collected. It comprises specific terms, recorded in a controlled environment. The goal is to use this dataset to enable the system to respond to predefined keywords. The speech transcription and keyword spotting (from speech) modules were extended and enhanced. New deep learning architectures, based on Time Delay Neural Networks (TDNN), were evaluated and integrated in the speech transcription system, while the keyword spotting module was enhanced with new text processing (lemmatization) and search mechanisms. These latter methods were evaluated on a new dataset, also developed within the project.

3.7. Development of data source integration solutions (proprietary formats, open-source formats, video, audio, thermal, infrared, depth, social networks and Internet, metadata, etc.) (stage II)

Several techniques along with design and elaboration methods have been studied, to serve as a starting point for the implementation of the final solution. The main source data is audio, visible and multispectral video (streaming and containers). Also, the main technologies for connection to social networks for web mining have been identified. The main subsystems which are the final solution are grouped more or less around technologies like ONVIF, RTSP, and PostgreSQL as employed database. The technical solutions adopted allow: the development of a 100% scalable modular system, which can be permanently improved and extended without the need to redesign it and which can be easily used and integrated into complex applications of intelligent video analysis, innovative video surveillance and finally, the integration with complex security systems.

3.8. Testing and optimization of video-audio processing and analysis algorithms

Algorithms optimization: (i) automatic lip reading – the best performances were achieved using the Inception-V4 model with the newest variant of the LRRo dataset, with an accuracy of 64%; ii) the crowd detection and behaviour analysis – we achieved a slight improvement in regards to processing time performances, while maintaining the system accuracy, iii) emotion detection from sound – the best performances were obtained with MLP type networks, with an accuracy of 88,7%; (iv) speech and speaker recognition – the utilization of hand-crafter features increased the system’s performances, in contrast to the sinc type of features, achieving significantly better results for the traditional audio features, computed with small stride and smaller signal windows than reported in the literature, (v) speech to text translation – the simple TDNN acoustic model produces better results than the other acoustic models architectures, the best results were achieved with an WER score of 2.79% for reading speech, in controlled conditions, in contrast to spontaneous speech in a non controlled environment (signal being affected by noise) with a WER score of 16%, (vi) key words retrieval – the methodology in 2 steps, namely transcription and words of interest query is more efficient, the best performer being the method based on DTW, with an F-score of 86,1% on RAV transcription, achieved from the unidentified rate of 11.7% and the false alarm rate of 14,5%; (vii) face and body based person search – the best trade-off between performance and processing time were achieved with the ResNet deep network, with 50 layers, with 5 aspect ratios and 6 different sizes; (viii) automatic object detection – the best results were achieved with the ResNetXt101 deep network, but with a higher processing time, the best trade-off, in this context, was achieved with the ResNet architectures with 50 or 101 layer for features extraction, in tandem with the features maps processed in a hierarchical way based on a FPN network; (ix) emotion detection from images and video streams – the best results are achieved by using specialized networks for each scenario, namely: ResNet with 50 layers for global expression recognition, and VGG with 16 layers, for action units, with an accuracy of 85%; (x) dissimulated expression identification – the approach is affected by the lack of sufficient professional annotated data, therefore, with the existing data, we achieved a maximum accuracy of 55%.

3.9. Development of the pilot for the integrated person identity validation system

3.10. Development of the pilot for the integrated dissimulated behaviour interpretation system

3.11. Development of the pilot for the integrated speech interpretation system

We integrated the three pilot systems: eProfiler, eSeeming and eTalk. We developed and validated the following algorithms: person re-identification, speaker identification and verification, facial expression recognition, emotional content recognition and detection of dissimulated behaviour, detection of crowds and their abnormal behaviour, suspicious object detection, violence detection, speech-to-text transcription, spoken keyword detection, security related vocabulary detection. These algorithms were integrated in the SPIA-VA platform, thus ensuring a centralized management of multimedia data, centralized and unitary management for the extracted metadata and security events. Several use scenarios were implemented: real-time analysis, forensics scenario and dedicated session processing. Functionality was demonstrated via integration tests. Both the central software platform and the algorithms are developed as separate libraries or software components that can operate and integrate together, thus offering the possibility of easily upgrading the system, both during the test period and during the entire product lifetime, based on the feedback given by users and on the retraining processes of the algorithms for new and varied scenarios.

3.12. Development of the distributed parallel processing solution

The distributed parallel processing capabilities of the SPIA-VA platform were extended in order to allow running multiple processing nodes and multiple processes per each node. Each node can run one or more multiple independent processes and we ensured that the addition of new modules is an easy task, using standard industry technologies. Furthermore each node can process one or more data sources or multiple processes per multiple data sources. This ensures a great degree of setup flexibility, making the system easily adaptable to many use case scenarios. The systems were fully integrated into the KVision platform, developed by the UTI Group partner

3.13. Development and implementation of the integrated solution, the user graphic interface and use-case scenarios and decisional support modules

The graphic interface for the centralized platform has been developed, such that it allows: centralized audio and video data management, centralized management of processing instances, centralized metadata management, friendly, unitary and ergonomic visualization of data which are of interest to the operator. Dedicated menus have been developed, new functions were added to the already existing ones and the interface has been optimized. The decompression and rendering parts have been optimized for graphical processing units, making the smooth playback of a large number of video sources possible.

3.14. Development of the connector/module responsible for providing data to other 3rd party applications

There has been developed the interconnecting module between SPIA-VA’s integrated platform and 3rd party applications. The interconnecting module’s main functionalities are to use input data obtained from different modules, under different formats, process them internally under a given multimedia (audio + video) and metadata format and send them to the output towards other 3rd party applications, usually under a ONVIF compliant format. In order to achieve the interconnecting module specifications, a ONVIF device management software model was used, written in C#, which uses FFmpeg format for media decoding. The ONVIF device management application implements similar and useful functions for the project, such as discovery and recognition of ONVIF devices, media, imagery, intelligent network video processing, events and PTZ services.

3.16. Development and implementation of data security solutions

A data security module has been developed, composed of two cryptographic libraries implemented in C programming language, which can offer all the cryptographic support necessary to ensure the confidentiality, integrity and non-repudiation of the data carried by the integrated SPIA-VA system. The module contains symmetrical cryptographic algorithms for encrypting any type of data, regardless of their length, hash-type algorithms for calculating and verifying data integrity and also for implementing digital signatures, a protocol for establishing cryptographic keys to ensure an efficient and safe cryptographic key management, and secure and asymmetric cryptographic algorithms for signature, signature verification and symmetric cryptographic keys establishment.

3.17. Development of integrated management software platform

There have been developed functions for the acquisition integrated platform, management, and processing and exploitation of audio-video and connected data, related to security scenarios. There have been developed modules dedicated to the operational scenarios, identified with the beneficiary authority, seeking, in the same time, the understanding of the modalities in which these processing techniques can be enhanced, and their implementation in a functional final product. As a result of the experiments, there have been identified and implemented: data acquisition methods from different sources, the implementation of algorithms in a unitary platform, the processing of the results from the processed instances, centralized or distributed, in a unitary interface, and the visualization and the unitary exploitation of the results.

3.18. Research, development and implementation of data / system fusion algorithms (TRL4)

Several late fusion methods based on deep neural networks were developed and validated. The proposed DNN architecture is composed of a variable number of fully connected layers, followed by batch normalization layer and a final fully connected decision layer. The inherent disadvantage of late fusion systems, i.e., an increase in system requirements created by the need to run multiple inductor systems for each multimedia sample, is, on the other hand, balanced by the drastic increase in performances brought on by the implementation of late fusion DNNs. The performances recorded by the late fusion systems almost doubled individual inductor performance, thus proving the advantages of such an approach.

3.19. Research, development and implementation of information search and "relevance feedback"-based algorithms (TRL4)

Various methods of refining search results have been studied and evaluated, using Relevance Feedback Techniques based on classical algorithms (Rocchio, FRE and SVM). In this direction we proposed the adaptation of them so that they can run on the test dataset in the context of relevance. Also, a graphical interface was built which helped to better analyze and interpret the results obtained. Unfortunately, the Relevance Feedback module described cannot be used practically due to the new regulations imposed by the Social Media platforms.

4.1. Elaborating and documenting the testing scenarios and evaluation metrics for the algorithms and the dedicated software modules

During this activity we elaborated and documented the testing scenarios, based on information collected from the Beneficiary and the project documentation developed in the previous stages of this project. We identified and described the following use case scenarios: person identity validation (eProfiler): 1st scenario - crowd behaviour analysis, 2nd scenario - person identification in a crowd, 3rd scenario - person identification on multiple cameras, 4th scenario - action and behaviour recognition, 5th scenario - dangerous object detection and classification; simulated behaviour interpretation (eSeeming): 6th scenario - microgesture detection in a “waiting room” scenario, 7th scenario - emotion recognition in a “waiting room” scenario, 8th scenario - emotion recognition based on speech patterns (intensity / variation / vibration) in an “interview room” scenario, 9th scenario - emotion recognition in a “access-control point” scenario; automatic speech interpretation (eTalk): 10th scenario - speech to text translation, 11th scenario - speaker verification, 12th scenario - speaker identification and 13th scenario - lip reading from visual footage. We identified the most appropriate evaluation metrics for testing these scenarios.

4.2. Elaborating and documenting the testing scenarios for the integrated solution

During this activity we elaborated the testing scenarios for the integrated solution and the functionality validation procedures. The solution was developed as an integrated platform, designed to process audio/video data flows, from different sources and transmit the processing results generated by the artificial intelligence modules to the user. Application testing and algorithm result validation specifications were developed for each of the identified scenarios. Testing scenarios were elaborated for all the functionalities that the platform integrates, including: basic functionalities like login and application settings configuration, functionalities describing the management of data sources and integrated algorithms such as adding and deleting media sources and processing algorithms, editing their settings, linking data sources with algorithms, and functionalities dealing with the generation of alarms and notifications.

4.3. Testing and optimizing the integrated system for person identification

During this activity, we have tested, optimized, and integrated the person identification system, the object recognition system, the violence detection system, and the crowd detection system, respectively, leading to a set of optimal parameters. The systems were successfully transferred into the integrated platform, and the utilization instructions were elaborated.

4.4. Testing and optimizing the integrated system for interpreting simulated behavior

During this activity, we have tested, optimized, and integrated the emotion recognition system from video and audio streams, for identifying the dissimulated behavior, leading to a set of optimal parameters. The systems were successfully transferred into the integrated platform, and the utilization instructions were elaborated.

4.5. Testing and optimization of the integrated system for speech analysis

During this activity, we have tested, optimized, and integrated the speech-to-text transcription system, the key-words retrieval from speech system, the speaker recognition system, and the lip-reading system, respectively, leading to a set of optimal parameters. The systems were successfully transferred into the integrated platform, and the utilization instructions were elaborated.

4.6. Testing of the integrated platform and the data interconnection/fusion module

During this activity, we have developed an integrated platform, aiming to interconnect a set of modules and to perform the transfer of the data. This platform was developed to allow audio and video data stream processing, from the moment of receiving these data from various sources to the moment of transmitting the generated results after the processing of the data. Through this platform, we aimed to create a software application that allows the user to obtain the desired results in an easy and efficient way. Moreover, through this platform, the user can perform all the required operations on the data streams. Therefore, the user can open one or more media sources, either from media files or from live streams such as web cameras. These data streams can be analyzed with one or more audio and video data processing algorithms. One of the most important advantages of this platform is its capacity to integrate more algorithms with different purposes, in a single module. Finally, through the platform, alarms will be transmitted to the user after receiving the results of the running algorithms.

4.7. Analysis and documentation of the system performance level, optimization / improvement necessities, limitations

During this activity, the level of performance reached by each module and integrated platform was analyzed. The proposed solution is composed of several systems, which approach the problem from three different angles: person identity validation, simulated behavior analysis and speech interpretation. Each of these systems deals with several aspects of the main theme using a series of specific algorithms. Taking into account these aspects, the performance presentation was approached as follows: for each module or component within the three presented systems, a short description was made, including the main objectives, the algorithms that were used and the datasets for which the tests were performed. The obtained results were presented taking into account the specificity of each module/component. The possibilities to improve the approached methods are found for each module/component separately. The limitations of the developed systems are closely related to the conditions under which the tests were performed. The platform developed within the project integrates all the presented modules offering functionalities that allow users to use the results of the modules/components in different operational scenarios. For this, data which was captured in real-time or stored offline can be used. We can also set certain threshold values and generate notifications or alarms. As certain algorithms require a considerable amount of resources, the platform offers the possibility of parallel and distributed processing. Developed on a modular architecture, it can be configured according to needs, thus offering the possibility to improve performance.

4.8. Elaborating the technical documentation for the realization / presentation / usage of the pilot model

During this activity, the technical documentation for the realization, presentation and usage of the pilot model was elaborated, in order to draw up a part of the technical documentation of the integrated platform: technical execution documentation: the realization of the pilot platform, for the integrated interface, starting from the already existing KVision solution on VMS platform with basic functionalities, and was completed by adding new modules, functions, algorithms and extended functionalities, as well new display formats; technological documentation: contains all the necessary steps for the installation and configuration of the pilot module, until its commissioning; the technical presentation documentation contains a general description of the integrated platform; the installation manual: contains the preliminary requirements necessary for the installation of the pilot model modules and describes the actual procedures for the actual installation and uninstallation of the basic software modules that make up the platform; administration manual: contains the way in which a user with administrator role unitarily manages all the permissions on the platform, through a web browser; operation manual: contains the way in which a user with the role of operator who sums up all the permissions controls the platform operations; user manual: contains information about how to add normal users to access the system from the administrator account and how to create mobile users to perform the function of accessing the system through mobile terminals.